Deep Multimodal Speaker Naming

Yongtao Hu¹,², Jimmy SJ. Ren³, Jingwen Dai⁴, Chang Yuan², Li Xu³, Wenping Wang¹

¹ The University of Hong Kong, Hong Kong; ² Lenovo Group Limited, Hong Kong; ³ SenseTime Group Limited, Hong Kong; ⁴ Xim Industry Inc., Guangzhou

The 23rd Annual ACM International Conference on Multimedia (ACM MM 2015)

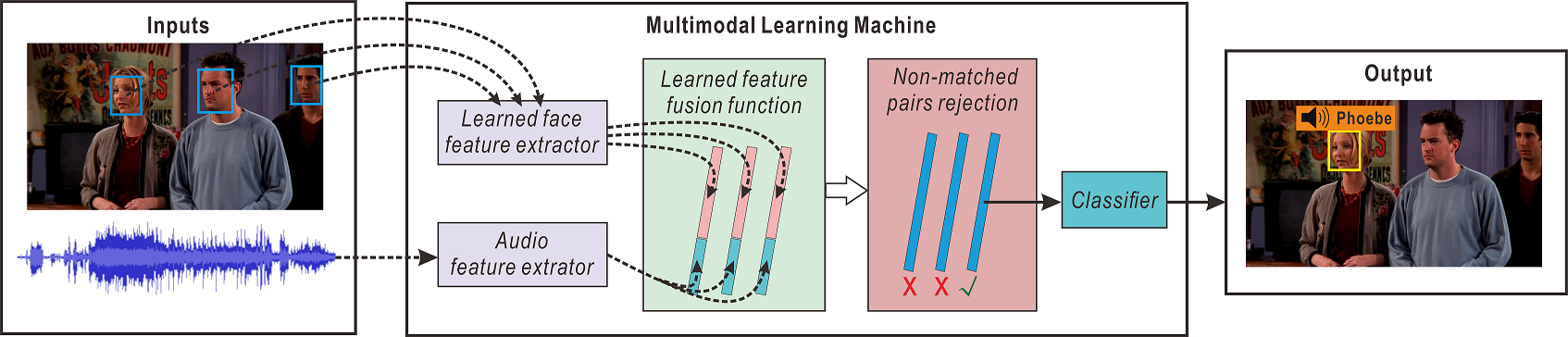

Multimodal learning framework for speaker naming.

Abstract

Automatic speaker naming is the problem of localizing as well as identifying each speaking character in a TV/movie/live show video. The problem is challenging mainly because of its multimodal nature: the face cue alone is insufficient to achieve good performance. Previous multimodal approaches usually process the data of each modality individually and merge the results using handcrafted heuristics. Such approaches work well for simple scenes but fail to achieve high performance for speakers with large appearance variations. In this paper, we propose a novel convolutional neural network (CNN) based learning framework that automatically learns the fusion function of face and audio cues. We show that, without using face tracking, facial landmark localization, or subtitles/transcripts, our system with robust multimodal feature extraction achieves state-of-the-art speaker naming performance on two diverse TV series. The dataset and implementation of our algorithm are publicly available online.
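To make the idea of a learned fusion function concrete, here is a minimal Python sketch. It is not the paper's CNN architecture: it assumes that face and audio features have already been extracted as short vectors, concatenates them (early fusion), and trains a small softmax classifier on the result. The function names (`fuse`, `train`, `predict`) and the toy feature values are hypothetical and chosen only for illustration.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse(face_feat, audio_feat):
    """Early fusion (an assumption of this sketch): concatenate both feature vectors."""
    return list(face_feat) + list(audio_feat)


def predict(weights, biases, fused):
    """Return class probabilities for one fused feature vector."""
    scores = [
        sum(w * x for w, x in zip(row, fused)) + b
        for row, b in zip(weights, biases)
    ]
    return softmax(scores)


def train(samples, num_classes, epochs=200, lr=0.5, seed=0):
    """Fit a softmax classifier on (fused_vector, label) pairs with plain SGD."""
    rng = random.Random(seed)
    dim = len(samples[0][0])
    weights = [[rng.uniform(-0.01, 0.01) for _ in range(dim)]
               for _ in range(num_classes)]
    biases = [0.0] * num_classes
    for _ in range(epochs):
        for fused, label in samples:
            probs = predict(weights, biases, fused)
            for c in range(num_classes):
                # Gradient of the cross-entropy loss w.r.t. the class score.
                grad = probs[c] - (1.0 if c == label else 0.0)
                for i in range(dim):
                    weights[c][i] -= lr * grad * fused[i]
                biases[c] -= lr * grad
    return weights, biases


# Toy data (made up): speakers 0 and 1 share a face feature, speakers 0 and 2
# share an audio feature, so neither cue alone separates all three speakers.
samples = [
    (fuse([1.0, 0.0], [1.0, 0.0]), 0),
    (fuse([1.0, 0.0], [0.0, 1.0]), 1),
    (fuse([0.0, 1.0], [1.0, 0.0]), 2),
]
weights, biases = train(samples, num_classes=3)
predicted = [
    max(range(3), key=lambda c: predict(weights, biases, fused)[c])
    for fused, _ in samples
]
```

In the toy data no single modality identifies every speaker, yet the classifier trained on the fused vector can tell them apart. This mirrors, in a much simplified form, the motivation for learning the fusion rather than merging per-modality results with handcrafted heuristics.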

Downloads

- Paper (PDF, 1.2 MB)

- Poster (PDF, 1.4 MB)

- Dataset (Multimodal Face+Audio Dataset)

- Source code (MATLAB, hosted on GitLab)

BibTeX

@inproceedings{hu2015deep,

title={{Deep Multimodal Speaker Naming}},

author={Hu, Yongtao and Ren, Jimmy SJ. and Dai, Jingwen and Yuan, Chang and Xu, Li and Wang, Wenping},

booktitle={Proceedings of the 23rd Annual ACM International Conference on Multimedia},

pages={1107--1110},

year={2015},

organization={ACM}

}